Linux Driver Basics (2): DMA-API

😊 There are two types of DMA mappings

Consistent DMA mappings (the hardware guarantees cache coherency), which are usually mapped at driver initialization and unmapped at the end, and for which the hardware should guarantee that the device and the CPU can access the data in parallel and will see updates made by each other without any explicit software flushing.

Streaming DMA mappings (software must maintain cache coherency), which are usually mapped for one DMA transfer and unmapped right after it (unless you use dma_sync_* below), and for which hardware can optimize for sequential accesses.

😄 DMA Direction:

The interfaces described in subsequent portions of this document take a DMA direction argument, which is an integer and takes on one of the following values:

- DMA_BIDIRECTIONAL

- DMA_TO_DEVICE

- DMA_FROM_DEVICE

- DMA_NONE

You should provide the exact DMA direction if you know it.

DMA_TO_DEVICE means "from main memory to the device". DMA_FROM_DEVICE means "from the device to main memory". It is the direction in which the data moves during the DMA transfer.

You are strongly encouraged to specify this as precisely as you possibly can.

If you absolutely cannot know the direction of the DMA transfer, specify DMA_BIDIRECTIONAL. It means that the DMA can go in either direction. The platform guarantees that you may legally specify this, and that it will work, but this may be at the cost of performance for example.

The value DMA_NONE is to be used for debugging. One can hold this in a data structure before you come to know the precise direction, and this will help catch cases where your direction tracking logic has failed to set things up properly.

Another advantage of specifying this value precisely (outside of potential platform-specific optimizations of such) is for debugging. Some platforms actually have a write permission boolean which DMA mappings can be marked with, much like page protections in the user program address space. Such platforms can and do report errors in the kernel logs when the DMA controller hardware detects violation of the permission setting.

Only streaming mappings specify a direction; consistent mappings implicitly have a direction attribute setting of DMA_BIDIRECTIONAL.

For Networking drivers, it's a rather simple affair. For transmit packets, map/unmap them with the DMA_TO_DEVICE direction specifier. For receive packets, just the opposite, map/unmap them with the DMA_FROM_DEVICE direction specifier.
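The direction rules above can be sketched in plain user-space C. This is only an illustration: the enum mirrors the kernel's `enum dma_data_direction` so the snippet compiles outside the kernel tree, while `struct pkt_buf`, `enum pkt_path` and the three helper functions are hypothetical names invented for this example, not kernel APIs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* User-space mirror of the kernel's enum dma_data_direction, redefined here
 * only so the sketch compiles outside the kernel tree. */
enum dma_data_direction {
    DMA_BIDIRECTIONAL = 0,
    DMA_TO_DEVICE = 1,
    DMA_FROM_DEVICE = 2,
    DMA_NONE = 3,
};

/* Hypothetical packet path and per-buffer bookkeeping (not kernel types). */
enum pkt_path { PKT_TX, PKT_RX };

struct pkt_buf {
    void *data;
    size_t len;
    enum dma_data_direction dir;
};

/* Start with DMA_NONE so a forgotten direction assignment is detectable. */
static void pkt_buf_init(struct pkt_buf *b, void *data, size_t len)
{
    b->data = data;
    b->len = len;
    b->dir = DMA_NONE;
}

/* Transmit buffers go memory -> device, receive buffers device -> memory. */
static void pkt_buf_set_path(struct pkt_buf *b, enum pkt_path path)
{
    b->dir = (path == PKT_TX) ? DMA_TO_DEVICE : DMA_FROM_DEVICE;
}

/* A driver would check this before mapping the buffer for DMA. */
static bool pkt_buf_direction_known(const struct pkt_buf *b)
{
    return b->dir != DMA_NONE;
}
```

In a real driver the stored direction would then be passed to the streaming map/unmap and dma_sync_* calls; that part needs kernel headers and is left out of this sketch.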

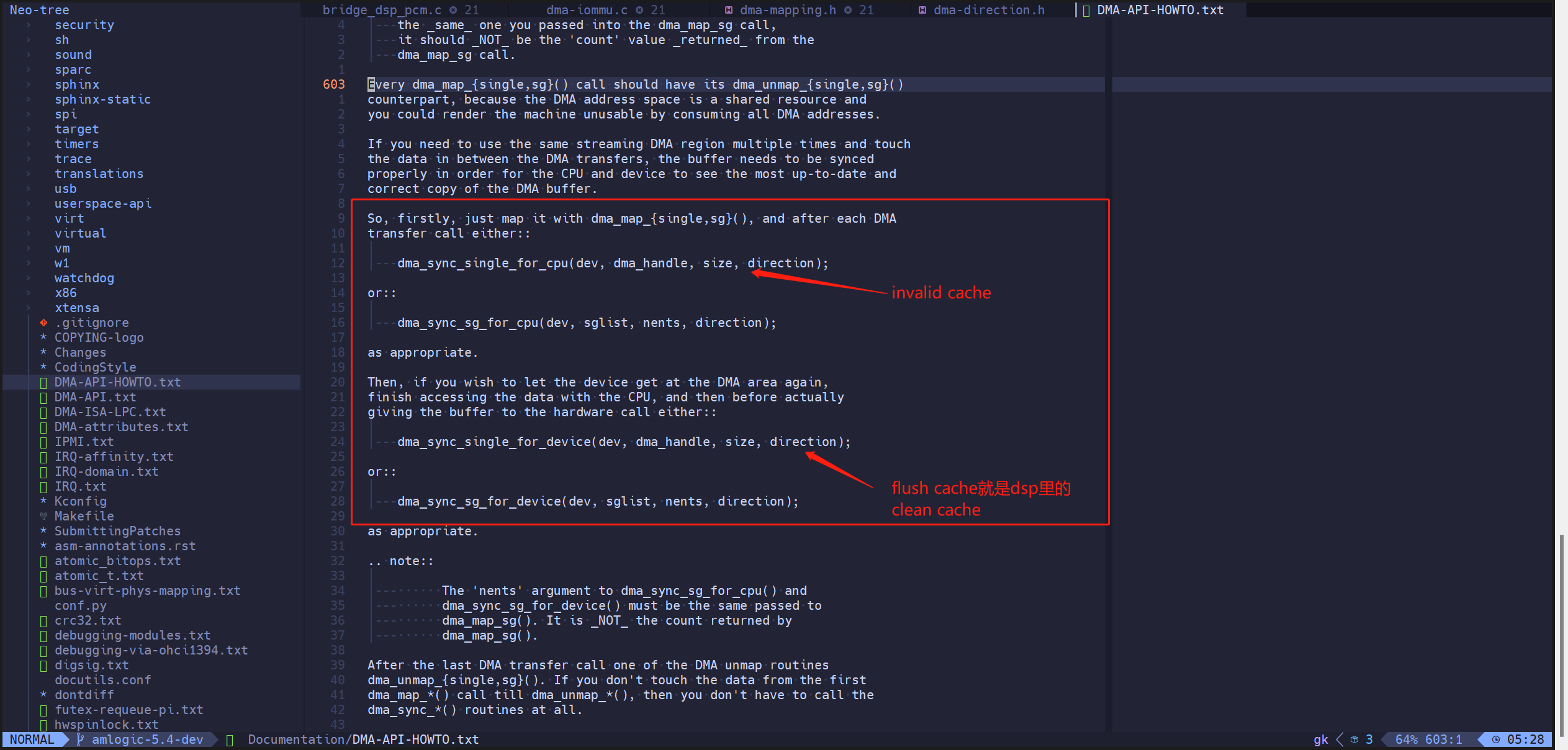

😋 dma_sync_single_for_device/cpu API

Call stack:

dma_sync_single_for_device/cpu

😜 Linux upstream community

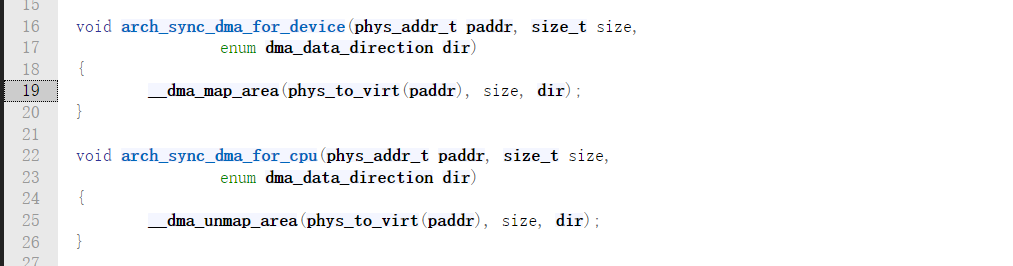

kernel 5.x

arch_sync_dma_for_device: performs a cache clean for every direction.

arch_sync_dma_for_cpu: does nothing if direction is DMA_TO_DEVICE; otherwise it invalidates the cache.

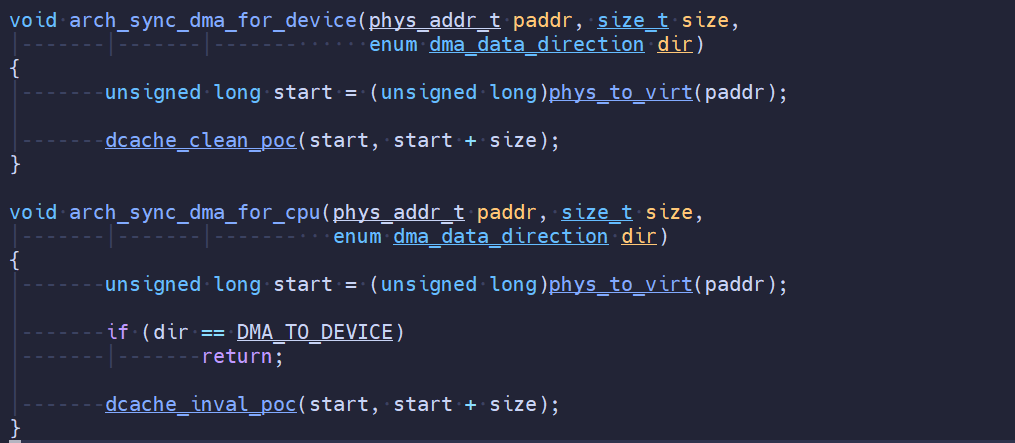

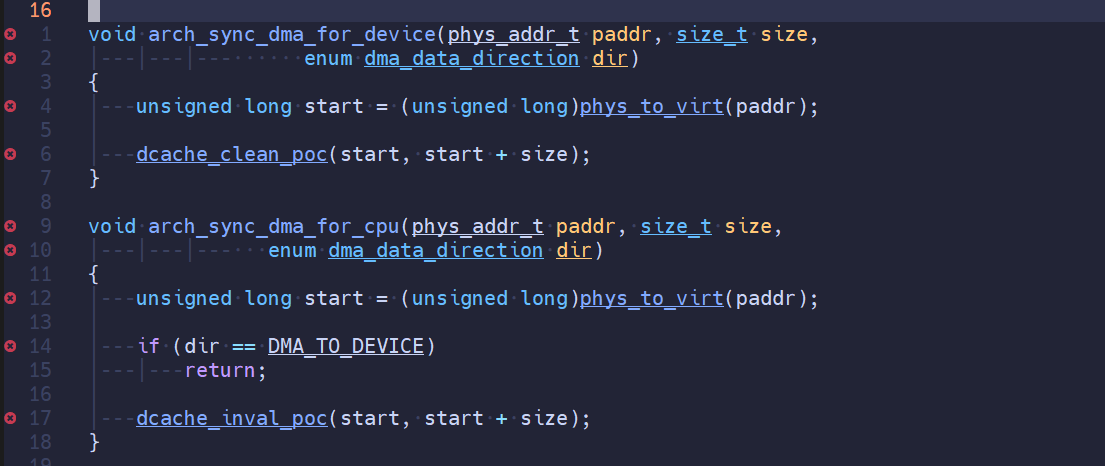

kernel 6.x

In arch_sync_dma_for_device, direction is ignored and dcache_clean_poc (cache clean) is executed.

In arch_sync_dma_for_cpu, if dir is DMA_TO_DEVICE the function returns immediately without doing anything; otherwise it executes dcache_inval_poc (cache invalidate).
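The decision logic described above can be written down as a small user-space model. It performs no real cache maintenance; it only returns which operation the text says would be chosen. The `model_*` functions and `enum cache_op` are hypothetical names for this sketch, and the direction enum is a local mirror of the kernel's.

```c
#include <assert.h>

/* Local mirror of the kernel's enum dma_data_direction. */
enum dma_data_direction {
    DMA_BIDIRECTIONAL = 0,
    DMA_TO_DEVICE = 1,
    DMA_FROM_DEVICE = 2,
    DMA_NONE = 3,
};

/* Which cache maintenance operation the model selects (hypothetical type). */
enum cache_op { CACHE_OP_NONE, CACHE_OP_CLEAN, CACHE_OP_INVALIDATE };

/* Models arch_sync_dma_for_device as described: dir is ignored, always clean. */
static enum cache_op model_sync_for_device(enum dma_data_direction dir)
{
    (void)dir;
    return CACHE_OP_CLEAN;
}

/* Models arch_sync_dma_for_cpu as described: nothing for DMA_TO_DEVICE,
 * invalidate for every other direction. */
static enum cache_op model_sync_for_cpu(enum dma_data_direction dir)
{
    if (dir == DMA_TO_DEVICE)
        return CACHE_OP_NONE;
    return CACHE_OP_INVALIDATE;
}
```

Read this way, the clean before the device takes ownership pushes CPU writes out to memory, and the invalidate before the CPU takes ownership discards stale lines so that later reads fetch what the device wrote.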

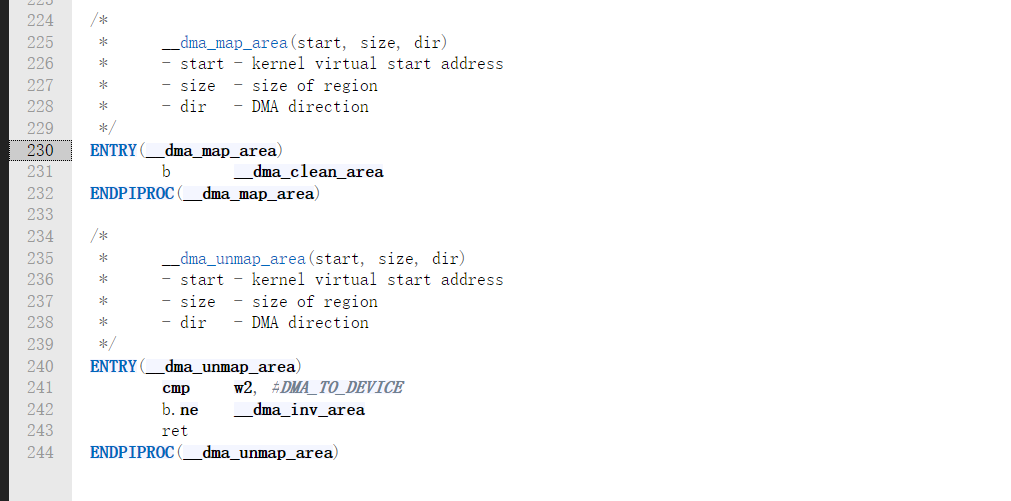

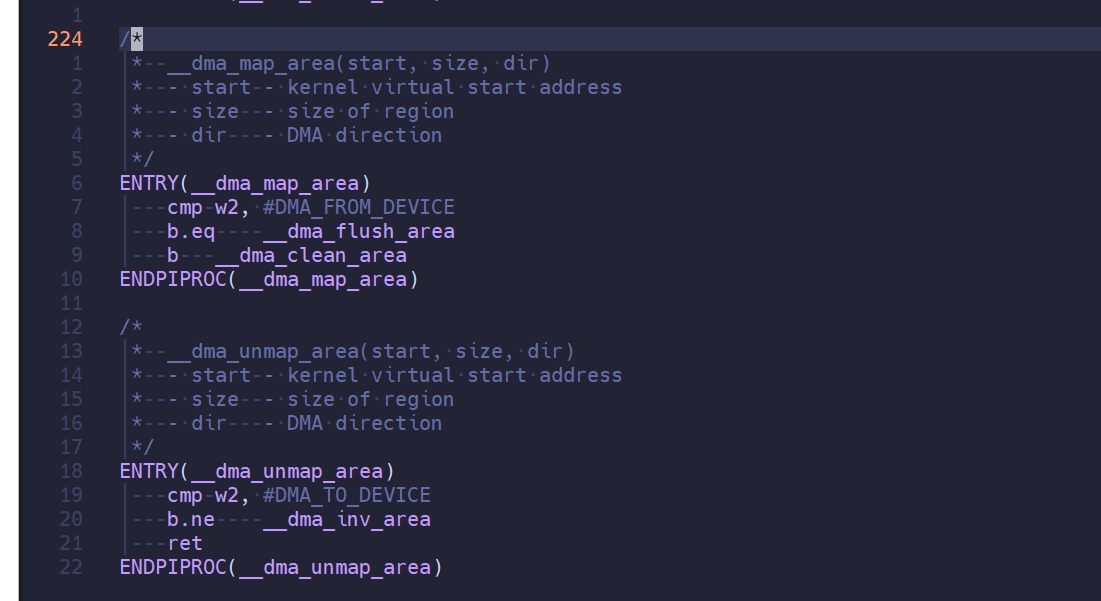

Google community

kernel 5.x

__dma_map_area(): if direction is DMA_FROM_DEVICE, it first performs a flush and then a cache clean; for any other direction it only performs a cache clean.

__dma_unmap_area(): if direction is DMA_TO_DEVICE, it does nothing; otherwise it executes __dma_inv_area (cache invalidate).
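As a rough user-space model of the behaviour just described (not the actual assembly), the two routines can be expressed as functions that record the ordered list of operations they would issue. The names `model_dma_map_area`, `model_dma_unmap_area` and `enum cache_op` are hypothetical and exist only in this sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Local mirror of the kernel's enum dma_data_direction. */
enum dma_data_direction {
    DMA_BIDIRECTIONAL = 0,
    DMA_TO_DEVICE = 1,
    DMA_FROM_DEVICE = 2,
    DMA_NONE = 3,
};

/* Operations the model can record (hypothetical type). */
enum cache_op { OP_FLUSH, OP_CLEAN, OP_INVALIDATE };

/* Models __dma_map_area() as described in the text: an extra flush for
 * DMA_FROM_DEVICE, then a clean for every direction.
 * Writes the ordered operations to out[] and returns how many were written. */
static size_t model_dma_map_area(enum dma_data_direction dir,
                                 enum cache_op out[2])
{
    size_t n = 0;

    if (dir == DMA_FROM_DEVICE)
        out[n++] = OP_FLUSH;
    out[n++] = OP_CLEAN;
    return n;
}

/* Models __dma_unmap_area() as described: nothing for DMA_TO_DEVICE,
 * otherwise a single invalidate. */
static size_t model_dma_unmap_area(enum dma_data_direction dir,
                                   enum cache_op out[2])
{
    if (dir == DMA_TO_DEVICE)
        return 0;
    out[0] = OP_INVALIDATE;
    return 1;
}
```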

kernel 6.x

for_device: ignores direction and performs a cache clean for every direction.

for_cpu: does nothing for DMA_TO_DEVICE; invalidates the cache for every other direction.

Summary

Linux upstream

kernel 5.x:

dma_sync_single_for_device: ignores direction and always performs a cache clean.

dma_sync_single_for_cpu: does nothing if direction is DMA_TO_DEVICE; otherwise it invalidates the cache.

kernel 6.x:

Same logic as kernel 5.x; only the API names changed.

Google community

kernel 5.x:

dma_sync_single_for_device: if direction is DMA_FROM_DEVICE, an extra cache flush is performed first; a cache clean is performed for every direction.

dma_sync_single_for_cpu: does nothing if direction is DMA_TO_DEVICE; otherwise it invalidates the cache (same logic as Linux upstream).

kernel 6.x:

Same logic as Linux upstream:

dma_sync_single_for_device: ignores direction and always performs a cache clean.

dma_sync_single_for_cpu: does nothing if direction is DMA_TO_DEVICE; otherwise it invalidates the cache.

🍦 QA

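Q: Once the data in the cache and in DDR are consistent, the DMA moves device data into DDR (so the cache and DDR now differ). If the flush interface is then called, will the clean step that flush performs first write the cached data back to DDR?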

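A: No. The clean operation checks the dirty flag. While the hardware rewrites DDR, the cache line's dirty flag stays 0 (not dirty), so the clean step of the flush only checks the dirty bit and writes nothing back; the invalidate step then follows.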
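The answer can be checked with a toy model of a single write-back cache line in front of one word of DDR. Everything here (`struct toy_sys`, `cpu_read`, `device_dma_write`, `cache_flush` and so on) is a hypothetical simplification for illustration, with flush modelled as clean followed by invalidate as described in the answer; real hardware has many more states.

```c
#include <assert.h>
#include <stdbool.h>

/* One cache line caching one word of DDR (toy model, hypothetical). */
struct toy_sys {
    int  ddr;        /* backing memory */
    int  line_data;  /* cached copy */
    bool valid;
    bool dirty;
};

/* CPU read: fill the line from DDR on a miss, then return the cached value. */
static int cpu_read(struct toy_sys *s)
{
    if (!s->valid) {
        s->line_data = s->ddr;
        s->valid = true;
        s->dirty = false;
    }
    return s->line_data;
}

/* CPU write: updates only the cache and marks the line dirty. */
static void cpu_write(struct toy_sys *s, int v)
{
    s->line_data = v;
    s->valid = true;
    s->dirty = true;
}

/* Device DMA write: goes straight to DDR and does not touch the cache. */
static void device_dma_write(struct toy_sys *s, int v)
{
    s->ddr = v;
}

/* Clean: write back only if the line is valid and dirty.
 * Returns true if a write-back actually happened. */
static bool cache_clean(struct toy_sys *s)
{
    if (s->valid && s->dirty) {
        s->ddr = s->line_data;
        s->dirty = false;
        return true;
    }
    return false;
}

/* Invalidate: drop the cached copy without writing it back. */
static void cache_invalidate(struct toy_sys *s)
{
    s->valid = false;
    s->dirty = false;
}

/* Flush = clean, then invalidate. Returns whether the clean wrote back. */
static bool cache_flush(struct toy_sys *s)
{
    bool wrote_back = cache_clean(s);
    cache_invalidate(s);
    return wrote_back;
}
```

In the scenario from the question the line is valid but not dirty when the device writes DDR, so `cache_flush` reports no write-back and the next `cpu_read` returns the device's data. If the CPU had written the line after the last clean, the same flush would overwrite DDR, which is why ownership must be handed over before the transfer starts.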

🍭 References

《Linux Document》
